How I Program With LLMs

A snapshot of how I build today (Feb 2026)

This is a practical field report on how I build software with LLMs. It’s the workflow that’s been sustainable for me.

AI hype/anti‑hype cycles are loud and obnoxious. If you’re digesting information in the Twitter-verse or through LinkedIn feeds, it’s hard to separate the signal from the noise. This document is grounded in my own day-to-day experience.

Core Tools

I lean on ChatGPT, Claude Code, and VS Code as my core tools. I briefly tried Cursor, but Claude Code fit into my workflow more smoothly. I’m experimenting with Codex again, but Claude Code has been faster in practice.

The Problem-Solving Loop

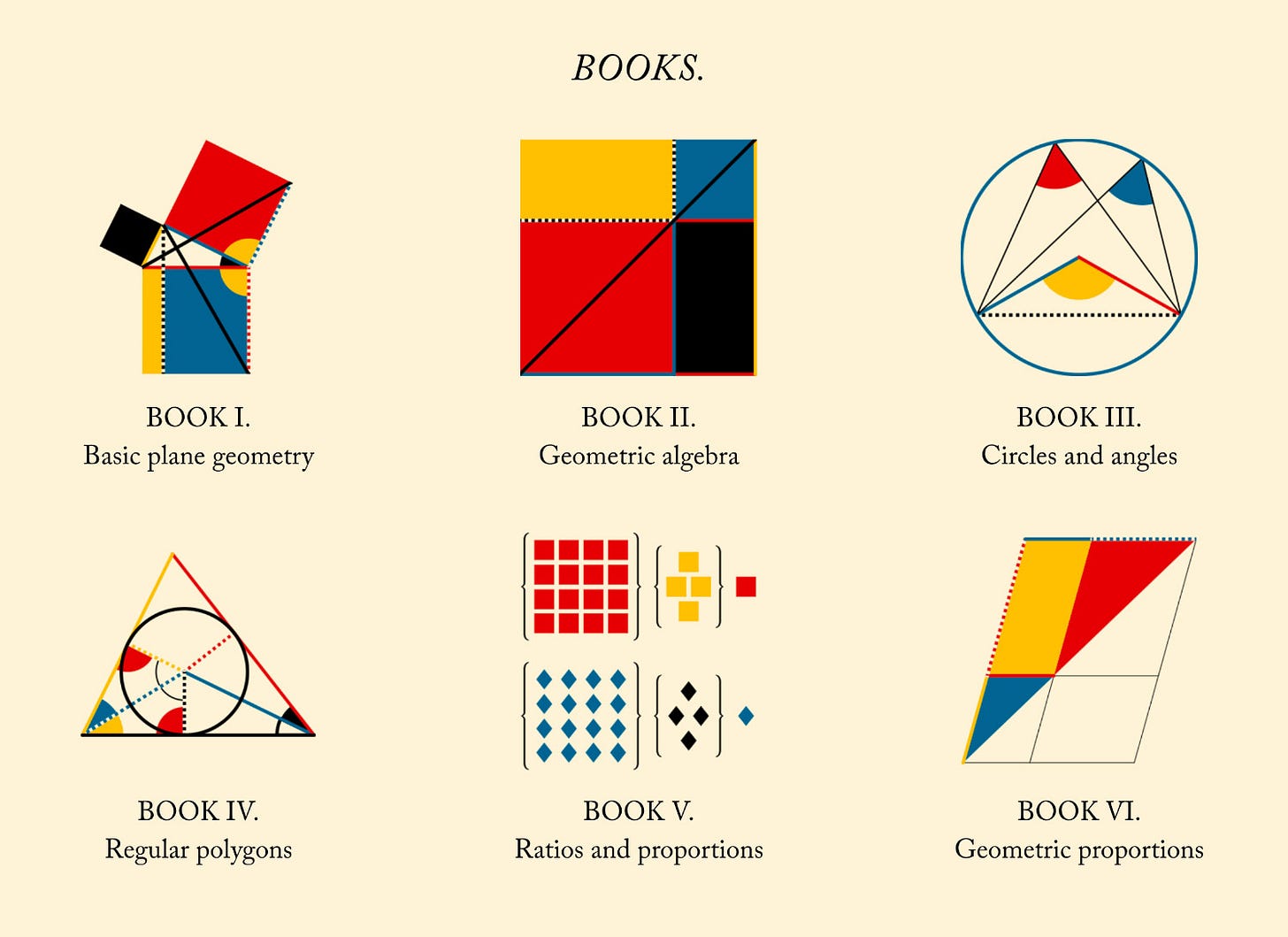

The backbone of everything is the classic problem‑solving loop from one of my favorite books, George Pólya’s “How to Solve It” (1945).

The Framework

Identify the problem: Do I fully understand what I’m solving?

Devise a plan: Generate options, pick a path, and prioritize tasks.

Execute the plan: Do each step and check along the way.

Review the work: Validate results and look for alternative angles.

Everything below is how LLMs help me move through this loop faster.

Research and Defining the Problem

Steps 1 and 2 are the most important. Execution is straightforward once the problem is well‑defined and the plan is stable. Powerful tools don’t matter if you’re solving the wrong problem.

I use LLMs to explore the codebase, surface tradeoffs, and generate alternative approaches before I commit. When a part of the system is unfamiliar, I ask for a report: how it works, where the awkward parts are, and what a clean path forward looks like. For conceptual topics, I use ChatGPT’s research mode, but most of the time I use Claude Code because it has rich context on the codebase.

Dictation

I dictate more than I type, using Spokenly with local Whisper models for speech-to-text. For me, dictation is about 3x faster than typing and removes a lot of low‑level friction. Rambling has a lower signal density than typed text, but these models are strong at extracting intent and tightening it.

Planning & Executable Documents

I use JSON plan docs as artifacts for agents to execute against. Each task has an id, a status, a name, a description, and acceptance criteria so the model can verify its own work. This has been one of my favorite changes: my workflow has flipped to repeatedly prompting “continue” and lightly steering the model when it drifts.

Example:

{
  "project": "Reduce report load time to <2s",
  "status": "pending",
  "tasks": [
    {
      "id": "add-cache",
      "status": "done",
      "name": "Add cache for aggregates",
      "description": "Introduce a Redis cache for report aggregates with a 10-minute TTL.",
      "acceptance_criteria": [
        "Cache key includes filters that affect results",
        "Cache TTL set to 10 minutes",
        "Cache hit/miss logged or observable"
      ]
    },
    {
      "id": "update-api",
      "status": "pending",
      "name": "Update API response shape",
      "description": "Adjust the API to return pre-aggregated stats in the new response format.",
      "acceptance_criteria": [
        "Response includes aggregate fields used by UI",
        "Response schema documented or type-checked"
      ]
    },
    {
      "id": "adjust-mapping",
      "status": "pending",
      "name": "Adjust UI mapping",
      "description": "Update the dashboard to consume the new response shape without regressions.",
      "acceptance_criteria": [
        "UI renders same totals as before",
        "No console errors or type errors"
      ]
    }
  ]
}

Execution: One Task at a Time

I rarely ask for an entire feature at once. I ask for the next task, then pause. Long, unattended runs are harder to review and more prone to drift.
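Asking for “the next task” can be made mechanical: check that the plan is well-formed, then pick the first task that isn’t done. Below is a minimal Python sketch that assumes the field names and the "done"/"pending" statuses from the example plan above; `load_plan`, `validate_plan`, and `next_task` are hypothetical helpers, not part of Claude Code or any other tool.

```python
import json
from typing import Any, Optional

Task = dict[str, Any]
Plan = dict[str, Any]

# Fields every task is expected to carry, mirroring the example plan.
REQUIRED_TASK_FIELDS = ("id", "status", "name", "description", "acceptance_criteria")


def load_plan(path: str) -> Plan:
    """Read a plan document from disk (the file name is up to you)."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def validate_plan(plan: Plan) -> list[str]:
    """Return human-readable problems; an empty list means the plan looks well-formed."""
    problems: list[str] = []
    seen_ids: set[str] = set()
    for index, task in enumerate(plan.get("tasks", [])):
        for field in REQUIRED_TASK_FIELDS:
            if field not in task:
                problems.append(f"task {index} is missing '{field}'")
        task_id = task.get("id")
        if task_id is not None:
            if task_id in seen_ids:
                problems.append(f"duplicate task id '{task_id}'")
            seen_ids.add(task_id)
        # Acceptance criteria are what let the model check its own work.
        if "acceptance_criteria" in task and not task["acceptance_criteria"]:
            problems.append(f"task '{task_id}' has no acceptance criteria")
    return problems


def next_task(plan: Plan) -> Optional[Task]:
    """Return the first task not marked "done", or None when the plan is finished."""
    for task in plan.get("tasks", []):
        if task.get("status") != "done":
            return task
    return None
```

With the example plan loaded, `next_task` would return the `update-api` task, since `add-cache` is already marked done.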

The Harness

Type checks, linting, unit tests, and self‑reviews are the harness that makes delegation safe. I have the model run the harness after every task and fix issues immediately.

I don’t use browser automation like Playwright or Chrome DevTools yet. Hot reloading is fast enough that I do UI reviews manually. I plan to try it once manual UI review starts to hurt.
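A harness run can be as simple as running each check in order and stopping at the first failure, so the model gets one focused error to fix. This sketch assumes a TypeScript project; the commands in `DEFAULT_HARNESS` are placeholders for whatever your repo uses, and self-review is left to the agent since it isn’t a shell command.

```python
import subprocess
import sys
from typing import Sequence

# Hypothetical harness for a TypeScript project; swap in your repo's own commands.
DEFAULT_HARNESS: list[list[str]] = [
    ["npx", "tsc", "--noEmit"],  # type check
    ["npx", "eslint", "."],      # lint
    ["npm", "test"],             # unit tests
]


def run_harness(steps: Sequence[Sequence[str]] = DEFAULT_HARNESS) -> tuple[bool, str]:
    """Run each check in order and stop at the first failure.

    Returns (passed, output): output holds the failing command plus its
    stdout/stderr, or an empty string if every step passed. A command that
    isn't installed raises FileNotFoundError.
    """
    for step in steps:
        result = subprocess.run(list(step), capture_output=True, text=True)
        if result.returncode != 0:
            return False, f"$ {' '.join(step)}\n{result.stdout}{result.stderr}"
    return True, ""


def main() -> None:
    """Print the first failure to stderr and exit non-zero so an agent notices."""
    passed, output = run_harness()
    if not passed:
        print(output, file=sys.stderr)
    sys.exit(0 if passed else 1)
```

Stopping early is a deliberate choice: a type error often causes downstream lint and test failures, and fixing the first one usually clears the rest.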

Agent Self-Review

Before I look at changes, I always ask the model to review its own work. Claude Code’s /review skill is surprisingly good at catching mistakes. I still do human review, but this removes a lot of obvious noise.

Human Review Depth

My review depth varies by layer. The lower in the stack I go, the more I scrutinize the work. UI changes get skimmed. API and authentication paths get closer scrutiny. Infra and data‑model changes get the deepest review.

I don’t trust model output blindly, and I don’t think anyone should. It’s a popular social-media hook to say you don’t review code the models write. To me, it reads as pure engagement bait.
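The layered review depth can be made explicit by mapping changed paths to a review tier, with the deepest tier touched deciding how carefully the whole PR gets read. This is a sketch with hypothetical directory patterns; a real repo would substitute its own layout.

```python
from fnmatch import fnmatchcase

# Hypothetical path patterns, ordered from deepest review to lightest.
# In fnmatch, "*" also matches "/", so "infra/*" covers nested files too.
REVIEW_TIERS: list[tuple[str, list[str]]] = [
    ("deep", ["infra/*", "*/infra/*", "*migrations/*", "*/models/*", "*.sql"]),
    ("close", ["api/*", "*/api/*", "auth/*", "*/auth/*"]),
    ("skim", ["ui/*", "*/ui/*", "*.css", "*.tsx"]),
]
TIER_ORDER = {"skim": 0, "close": 1, "deep": 2}


def review_depth(path: str) -> str:
    """Return the first (deepest) tier whose patterns match the path."""
    for tier, patterns in REVIEW_TIERS:
        if any(fnmatchcase(path, pattern) for pattern in patterns):
            return tier
    return "close"  # Unknown paths default to a careful read.


def review_depth_for_pr(paths: list[str]) -> str:
    """A PR gets the deepest review any of its files requires."""
    return max((review_depth(p) for p in paths), key=TIER_ORDER.__getitem__, default="skim")
```

Because tiers are checked deepest first, a `.tsx` file under an `auth/` directory is treated as an auth change rather than a UI tweak.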

Pull Requests

I have agents write commits and PRs. They’re wordy, but they capture implementation context I would typically skip. It’s easier to trim the verbosity than to rebuild the detail.

This also makes the commit log richer. I often have Claude review it to help generate changelogs or internal team updates, and that value compounds over time.
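Before handing the log to Claude, a deterministic first pass can bucket commits into changelog sections. This sketch assumes Conventional Commits-style subjects such as `feat:` or `fix(api):`, which the post doesn’t specify; `commit_subjects` shells out to `git log`, and the section titles are illustrative.

```python
import re
import subprocess
from collections import defaultdict

# Assumes Conventional Commits-style subjects, e.g. "feat(reports): cache aggregates".
SUBJECT = re.compile(r"^(?P<type>\w+)(?:\([^)]*\))?!?:\s*(?P<summary>.+)$")
SECTION_TITLES = {"feat": "Features", "fix": "Fixes", "perf": "Performance"}


def commit_subjects(since_ref: str) -> list[str]:
    """Return commit subject lines in since_ref..HEAD, newest first."""
    result = subprocess.run(
        ["git", "log", "--pretty=format:%s", f"{since_ref}..HEAD"],
        capture_output=True, text=True, check=True,
    )
    return [line for line in result.stdout.splitlines() if line.strip()]


def group_for_changelog(subjects: list[str]) -> dict[str, list[str]]:
    """Bucket subjects into changelog sections; unrecognized ones land in "Other"."""
    sections: dict[str, list[str]] = defaultdict(list)
    for subject in subjects:
        match = SUBJECT.match(subject)
        if match:
            sections[SECTION_TITLES.get(match["type"], "Other")].append(match["summary"])
        else:
            sections["Other"].append(subject)
    return dict(sections)
```

Usage might look like `group_for_changelog(commit_subjects("v1.4.0"))`, where the tag is hypothetical; the grouped output is then a compact starting point for the model to turn into prose.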

Splitting Work into Manageable PRs

Large changes are common now, and large PRs are hard to review. I ask agents to split work into smaller PRs and create branches for each. This keeps human reviews sane and focused.
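One simple starting point for a split is grouping changed files by top-level directory, one branch per area. This is a hypothetical heuristic, not how any agent actually decides; real splits usually follow dependency order (for example data model, then API, then UI), and the `split/` branch prefix is an assumption.

```python
import subprocess
from collections import defaultdict
from pathlib import PurePosixPath


def changed_files(base: str = "main") -> list[str]:
    """Files changed on this branch since it diverged from base (three-dot diff)."""
    result = subprocess.run(
        ["git", "diff", "--name-only", f"{base}...HEAD"],
        capture_output=True, text=True, check=True,
    )
    return [line for line in result.stdout.splitlines() if line]


def split_by_area(paths: list[str], prefix: str = "split") -> dict[str, list[str]]:
    """Map a hypothetical branch name per top-level directory to the files it would carry."""
    groups: dict[str, list[str]] = defaultdict(list)
    for path in paths:
        parts = PurePosixPath(path).parts
        area = parts[0] if len(parts) > 1 else "root"  # Top-level files share one branch.
        groups[f"{prefix}/{area}"].append(path)
    return {branch: sorted(files) for branch, files in sorted(groups.items())}
```

The output is only a proposal: each branch still needs to build and pass the harness on its own before it becomes a PR.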

Debugging

When an alert is triggered or something breaks, I hand the error and context to Claude Code immediately. It can trace logs and code paths and test hypotheses much faster than I can. I still verify, but I don’t start from zero anymore.

Example: I was cooking dinner when a production “Excessive 500s” alert went off. I pointed Claude at our production CloudWatch logs; it found the issue and had a fix ready before the pasta was done.
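Pulling the relevant log events is usually the first step in that kind of triage. This sketch targets the CloudWatch Logs `filter_log_events` API as exposed by boto3; it assumes JSON-structured logs with a numeric `status` field, and the log group name in the usage note is hypothetical. The client is passed in so the function can be exercised without AWS credentials.

```python
import time
from typing import Any

# Assumes JSON-structured logs with a numeric "status" field (hypothetical field name).
FIVE_XX_PATTERN = "{ $.status >= 500 }"


def fetch_5xx_events(client: Any, log_group: str, minutes: int = 30) -> list[dict]:
    """Page through recent CloudWatch Logs events that match FIVE_XX_PATTERN.

    `client` is expected to behave like boto3.client("logs").
    startTime/endTime are milliseconds since the Unix epoch.
    """
    end_ms = int(time.time() * 1000)
    start_ms = end_ms - minutes * 60 * 1000
    params: dict[str, Any] = {
        "logGroupName": log_group,
        "startTime": start_ms,
        "endTime": end_ms,
        "filterPattern": FIVE_XX_PATTERN,
    }
    events: list[dict] = []
    while True:
        response = client.filter_log_events(**params)
        events.extend(response.get("events", []))
        token = response.get("nextToken")
        if not token:  # No token means there are no more pages.
            return events
        params["nextToken"] = token
```

Usage might look like `fetch_5xx_events(boto3.client("logs"), "/ecs/reports-api")`; the matching messages are what you would paste or point an agent at.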

Skills

I rely on reusable skills to standardize the problem-solving loop.

A skill for research + plan generation

A skill for executing a single task with the full harness

Claude Code’s /review skill for self‑review

I’m testing a /code‑simplifier skill to trim the excess output models are famous for

Async Work and Cloud Agents

My main work stays sequential and single‑threaded. I’ll spin up async tasks for side investigations in cloud environments like Claude Web so I don’t pollute my local branch. I tried git worktrees, but haven’t found a flow I like yet.

I’ve started using the Claude Code app on the subway or when I’m out of the house. Handing an agent a loose thought while I’m on the go is becoming addictive.

I keep imagining a future where I can go for a run and have Claude implement a project while I talk to it. Without a UI to view changes it seems hard to pull off, but the direction feels real.

APIs, Bash, and CLIs

Agents are naturally good with APIs and bash. I regularly point them at endpoints to test, debug, and run scripts. I use Postman far less now because I can have the model run curl directly in my CLI.
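The kind of check the model does with curl can also be written as a small Python helper: send a GET, record the status and latency, and attach a bearer token read from the environment. `API_TOKEN` and the health-check URL are hypothetical; the `opener` parameter exists only so the function can be tested without a live server.

```python
import os
import time
import urllib.error
import urllib.request
from typing import Callable


def build_request(url: str, token_env: str = "API_TOKEN") -> urllib.request.Request:
    """Build a GET request, adding a bearer token from the environment if one is set."""
    headers = {"Accept": "application/json"}
    token = os.environ.get(token_env)
    if token:
        headers["Authorization"] = f"Bearer {token}"
    return urllib.request.Request(url, headers=headers, method="GET")


def smoke_test(
    url: str,
    opener: Callable = urllib.request.urlopen,
    timeout: float = 10.0,
) -> tuple[int, float]:
    """Return (HTTP status, elapsed seconds) for a GET to url."""
    request = build_request(url)
    start = time.perf_counter()
    try:
        with opener(request, timeout=timeout) as response:
            status = response.status
    except urllib.error.HTTPError as err:
        # urlopen raises HTTPError for 4xx/5xx; the status code is still useful.
        status = err.code
    return status, time.perf_counter() - start
```

Usage might look like `smoke_test("http://localhost:8000/health")`. Keeping the token in an environment variable means neither the script nor the prompt has to contain it.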

What I Don’t Do

MCPs

I might not be the right audience for MCP (Model Context Protocol) servers. They feel like a crutch to me, though they may lower the barrier for people who don’t want to manage API keys. These models are incredibly resourceful and great at writing scripts and calling APIs, so I’d rather handle API tokens directly and let the model build the tooling it needs.

Ralph Wiggum Scripts

I love the idea of fully autonomous execution loops, but they haven’t worked for me yet. My workflow still needs light, continuous steering, so Ralph remains more appealing in theory than in my practice.
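The Ralph approach is usually described as a shell loop that feeds the same prompt to an agent over and over. This Python sketch is a variation under stated assumptions, not the canonical script: it reuses the plan’s task statuses as the stop condition and adds an iteration cap. `load_plan` and `run_agent` are hypothetical callables you would wire to your own plan file and agent CLI.

```python
from typing import Any, Callable

Plan = dict[str, Any]


def plan_is_done(plan: Plan) -> bool:
    """A plan is done when every task is marked "done" (statuses as in the example plan)."""
    return all(task.get("status") == "done" for task in plan.get("tasks", []))


def ralph_loop(
    load_plan: Callable[[], Plan],
    run_agent: Callable[[str], None],
    prompt: str = "continue",
    max_iterations: int = 20,
) -> int:
    """Re-run the agent with the same prompt until the plan is done.

    Returns the number of agent runs. The cap guards against the loop spinning
    forever when the agent stalls or drifts, since nobody is steering it.
    """
    runs = 0
    while not plan_is_done(load_plan()):
        if runs >= max_iterations:
            raise RuntimeError(f"plan still incomplete after {runs} runs")
        run_agent(prompt)
        runs += 1
    return runs
```

The loop only knows whether tasks are marked done, not whether they were done well, which is exactly the gap that light human steering fills.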

Agent Swarms

I see multi-agent swarms all over Twitter. They sound cool, and I’ve tried them. In practice they fragment my attention and increase context switching, so the gains evaporate. I get the best results when I stay focused on one thing at a time.

Every hype cycle creates two extremes: the hype‑man and the hater. Both are loud. Both are unhelpful. Both conform to the internet machine and fill the airwaves with endless noise.

I’m trying to stay grounded in what’s working for me. If any of this helps you build faster or more clearly, it’s done its job.